Denoising Autoencoders

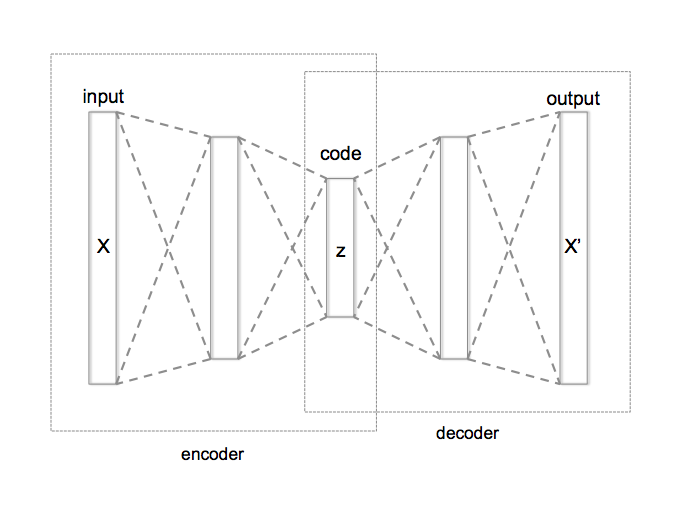

The denoising autoencoder (DAE) is a natural extension of the traditional autoencoder. In fact, an autoencoder can be made into a DAE with a simple modification of the loss function.

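Let $f_\theta$ denote the autoencoder, parameterized by $\theta$, and let $L$ be a reconstruction loss (e.g. squared error). The usual autoencoder loss function is

$$\mathcal{L}_{\text{AE}}(\theta) = L\big(x, f_\theta(x)\big)$$

while the DAE loss is

$$\mathcal{L}_{\text{DAE}}(\theta) = L\big(x, f_\theta(\tilde{x})\big)$$

In the case of the DAE, the input $\tilde{x}$ is assumed to be a copy of $x$ corrupted by some stochastic process $C(\tilde{x} \mid x)$. While $C$ is arbitrary, this post will consider Gaussian noise:

$$\tilde{x} = x + \epsilon, \qquad \epsilon \sim \mathcal{N}(0, \sigma^2 I)$$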
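A minimal NumPy sketch of the two losses, assuming squared error as the reconstruction loss and Gaussian corruption with standard deviation `sigma`. The helper names (`corrupt`, `ae_loss`, `dae_loss`) and the random "image" batch are hypothetical, chosen only for illustration.

```python
import numpy as np

def corrupt(x, sigma, rng):
    """Gaussian corruption: x_tilde = x + eps, with eps ~ N(0, sigma^2 I)."""
    return x + rng.normal(0.0, sigma, size=x.shape)

def reconstruction_loss(x, x_hat):
    """Squared-error reconstruction loss, averaged over all entries."""
    return float(np.mean((x - x_hat) ** 2))

def ae_loss(f, x):
    # Usual autoencoder loss: the model sees the clean x and must reproduce it.
    return reconstruction_loss(x, f(x))

def dae_loss(f, x, sigma, rng):
    # DAE loss: the model sees a corrupted copy but is scored against the clean x.
    return reconstruction_loss(x, f(corrupt(x, sigma, rng)))

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(1000, 32 * 32))  # hypothetical batch of flattened 32x32 images
identity = lambda v: v

print(ae_loss(identity, x))             # 0.0
print(dae_loss(identity, x, 0.5, rng))  # approximately sigma**2 = 0.25
```

The identity map drives the usual loss to zero but pays roughly $\sigma^2$ per pixel under the DAE loss, which is one way to see why the DAE objective cannot be satisfied by simply copying the input.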

Aside from the obvious denoising capability of the DAE, training with the DAE loss offers other benefits. Random noise forces the encoder to learn a mapping from input to latent space that is robust to small perturbations. The DAE loss also acts as a regularizer: even an overparameterized model cannot minimize it by simply learning the identity mapping, because the model only ever sees the corrupted input $\tilde{x}$, and copying it would reproduce the noise in the output.
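The regularization argument can be checked on a toy problem. The sketch below swaps the neural network for a linear model $\hat{x} = xW$ with a full $d \times d$ weight matrix (enough capacity to represent the identity exactly) and fits it in closed form with least squares. The synthetic low-dimensional data and all sizes are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
n, d, k, sigma = 5000, 20, 2, 0.5

# Hypothetical data: 2 latent factors embedded in 20 dimensions, plus a small
# isotropic component so that X has full column rank.
A = rng.normal(size=(k, d))

def sample(n_rows):
    z = rng.normal(size=(n_rows, k))
    return z @ A + 0.05 * rng.normal(size=(n_rows, d))

def corrupt(x):
    # Gaussian corruption with standard deviation sigma.
    return x + sigma * rng.normal(size=x.shape)

X = sample(n)
X_tilde = corrupt(X)

# Linear model x_hat = x @ W with d*d = 400 free weights: more than enough
# capacity to represent the identity map exactly.
W_ae, *_ = np.linalg.lstsq(X, X, rcond=None)         # minimizes the usual AE loss
W_dae, *_ = np.linalg.lstsq(X_tilde, X, rcond=None)  # minimizes the DAE loss

I = np.eye(d)
print(np.allclose(W_ae, I))       # True: the AE loss is minimized by copying the input
print(np.linalg.norm(W_dae - I))  # far from 0: the DAE solution is not the identity

# On fresh data, the DAE-trained map beats passing the noisy input straight through.
X_test = sample(n)
X_test_tilde = corrupt(X_test)
loss_identity = np.mean((X_test - X_test_tilde) ** 2)  # roughly sigma**2
loss_dae = np.mean((X_test - X_test_tilde @ W_dae) ** 2)
print(loss_identity, loss_dae)
```

Under the DAE loss, the fitted map shrinks the low-variance directions (which are mostly noise) toward zero instead of copying them, which is the regularizing effect described above in its simplest linear form.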

Demo

Note: TensorFlow.js does not always work on mobile browsers. For best results, use the latest version of Chrome or Firefox on a desktop computer.

The image on the left is the input to a DAE trained on the MNIST dataset [1] with Gaussian noise. The image on the right is the denoised output of the DAE. Clicking the button above re-draws the input noise, showing that the DAE is robust to random perturbations of its input.
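The demo's network and MNIST training code are not reproduced here, so the following is only a toy NumPy sketch of the same idea: a tiny DAE with a two-unit tanh bottleneck, trained by full-batch gradient descent with fresh Gaussian noise drawn at every step. The synthetic data (a hypothetical 2-D manifold in 16 dimensions standing in for MNIST), the layer sizes, the learning rate, and the noise level are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d, k, sigma = 16, 2, 0.3  # input size, latent size (two variables), noise std

# Hypothetical stand-in for MNIST: clean "images" in (0, 1) lying on a
# smooth 2-D manifold inside R^16.
B = rng.normal(size=(k, d))

def make_data(n):
    t = rng.uniform(-1.0, 1.0, size=(n, k))
    return 0.5 + 0.5 * np.tanh(t @ B)

# Encoder h = tanh(x W1 + b1); decoder x_hat = h W2 + b2.
params = {
    "W1": 0.1 * rng.normal(size=(d, k)), "b1": np.zeros(k),
    "W2": 0.1 * rng.normal(size=(k, d)), "b2": np.zeros(d),
}

def forward(p, x_tilde):
    h = np.tanh(x_tilde @ p["W1"] + p["b1"])
    return h, h @ p["W2"] + p["b2"]

def dae_loss(p, x, x_tilde):
    # Reconstruct the clean x from the corrupted x_tilde (mean squared error).
    _, x_hat = forward(p, x_tilde)
    return float(np.mean((x_hat - x) ** 2))

def grads(p, x, x_tilde):
    # Manual backpropagation of the mean squared error.
    h, x_hat = forward(p, x_tilde)
    g = 2.0 * (x_hat - x) / x.size
    da = (g @ p["W2"].T) * (1.0 - h ** 2)
    return {"W1": x_tilde.T @ da, "b1": da.sum(0),
            "W2": h.T @ g, "b2": g.sum(0)}

X = make_data(1000)
X_eval_tilde = X + sigma * rng.normal(size=X.shape)  # fixed noise for evaluation
loss_before = dae_loss(params, X, X_eval_tilde)

lr = 0.1
for step in range(3000):
    # A fresh corruption of the clean data is drawn at every step.
    X_tilde = X + sigma * rng.normal(size=X.shape)
    for name, grad in grads(params, X, X_tilde).items():
        params[name] -= lr * grad

loss_after = dae_loss(params, X, X_eval_tilde)

# Mimic the demo button: re-draw the noise on one input many times.
x0 = X[:1]
noisy = x0 + sigma * rng.normal(size=(200, d))
_, denoised = forward(params, noisy)
input_spread = noisy.std(axis=0).mean()
output_spread = denoised.std(axis=0).mean()
print(loss_before, loss_after, input_spread, output_spread)
```

The last few lines compare how much the noisy inputs vary across noise draws with how much the denoised outputs vary; a robust DAE should produce a much smaller spread in its outputs.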

Try it out! Use the sliders to traverse the two-dimensional latent space of a DAE and see what the decoder outputs when the encoder's two latent variables take those values. The inputs and outputs of this DAE are 32x32 (1024 pixels), so compressing them to two latent variables is a 512x reduction in dimensionality; for that much compression, these results are pretty good.
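Traversing the latent space amounts to evaluating the decoder on a grid of latent values. The sketch below uses a hypothetical decoder (a randomly initialized two-layer network with a sigmoid output, not the trained decoder from the demo) purely to show the mechanics of the sweep and the 1024 / 2 = 512 arithmetic.

```python
import numpy as np

H = W = 32
latent_dim = 2
reduction = (H * W) // latent_dim
print(reduction)  # 512

rng = np.random.default_rng(0)
# Hypothetical decoder weights standing in for a trained decoder.
W1 = rng.normal(size=(latent_dim, 64))
b1 = np.zeros(64)
W2 = rng.normal(size=(64, H * W)) / 8
b2 = np.zeros(H * W)

def decode(z):
    """Map latent vectors of shape (..., 2) to images of shape (..., 32, 32)."""
    h = np.maximum(0.0, z @ W1 + b1)             # ReLU hidden layer
    logits = h @ W2 + b2
    pixels = 1.0 / (1.0 + np.exp(-logits))       # sigmoid -> intensities in [0, 1]
    return pixels.reshape(*z.shape[:-1], H, W)

# Emulate the two sliders by sweeping each latent variable over a range.
values = np.linspace(-3.0, 3.0, 7)
z1, z2 = np.meshgrid(values, values, indexing="ij")
grid = np.stack([z1, z2], axis=-1)  # shape (7, 7, 2)
images = decode(grid)               # shape (7, 7, 32, 32)
print(images.shape)
```

Each slider position corresponds to one `(z1, z2)` entry of `grid`; with a trained decoder, neighbouring entries would show smoothly varying digits.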

Source code

References

[1] MNIST dataset http://yann.lecun.com/exdb/mnist/

[2] Pascal Vincent, et al. “Extracting and Composing Robust Features with Denoising Autoencoders.” ICML, 2008.

[3] “14.5 Denoising Autoencoders.” Deep Learning, by Ian Goodfellow, Yoshua Bengio, and Aaron Courville, The MIT Press, 2017, pp. 501–502.